There were serialization issues: the logging event had no serializable format, so it couldn't be compressed, encoded, or transformed in any meaningful way. There were querying issues: because an event's markers and arguments can be of any type, there was no common format to read elements and properties. There were persistence issues: if the application crashed, the logs were gone. If there was a long-running operation that exceeded the size of the in-memory buffer, the logs were gone. If you wanted to open up the logs in an editor or save them off for later, the logs were gone.

I worked around the event/entry dichotomy by "dumping" events into another appender with a JSON encoder on request, and doing querying and serialization from there. But it required the application to know what was going on.

I wish I could say that I was aware of all the issues at once, but the first thing I thought about was saving memory. If I encoded events, I could produce a stream of serialized entries, and I could compress all the entries and send them to off-heap memory where they wouldn't have to worry about garbage collection. I'd need a binary encoding that was concise and fast.

I will frankly admit that there are several cool hacks involved in binary encoding that I wanted to try. For example, the single fastest logger is nanolog. Nanolog does 80 million ops/s, with a median latency of 7 ns. The author wrote his doctoral thesis on the implementation. If you look behind the scenes of Java Flight Recorder, there are commonalities with nanolog.
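To make the "encode events into serialized entries and park them off-heap" idea concrete, here is a minimal sketch using only the JDK. The record layout (timestamp, level byte, length-prefixed UTF-8 message) and the names `OffHeapEntrySketch` and `Entry` are assumptions made up for illustration; this is not Blacklite's or nanolog's actual format.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: serialize log entries into a direct (off-heap) buffer.
final class OffHeapEntrySketch {
    // Assumed layout per entry: 8-byte timestamp, 1-byte level, 4-byte length, UTF-8 message.
    record Entry(long timestampMillis, byte level, String message) {}

    private final ByteBuffer buffer;

    OffHeapEntrySketch(int capacityBytes) {
        // Direct buffers are allocated outside the garbage-collected heap.
        this.buffer = ByteBuffer.allocateDirect(capacityBytes);
    }

    // Encode one entry into the buffer; returns false when it does not fit.
    boolean write(Entry e) {
        byte[] msg = e.message().getBytes(StandardCharsets.UTF_8);
        int needed = Long.BYTES + Byte.BYTES + Integer.BYTES + msg.length;
        if (buffer.remaining() < needed) {
            return false;
        }
        buffer.putLong(e.timestampMillis()).put(e.level()).putInt(msg.length).put(msg);
        return true;
    }

    // Decode everything written so far, oldest first.
    List<Entry> readAll() {
        ByteBuffer view = buffer.duplicate(); // shares the bytes, independent position/limit
        view.flip();                          // limit = bytes written, position = 0
        List<Entry> entries = new ArrayList<>();
        while (view.hasRemaining()) {
            long ts = view.getLong();
            byte level = view.get();
            byte[] msg = new byte[view.getInt()];
            view.get(msg);
            entries.add(new Entry(ts, level, new String(msg, StandardCharsets.UTF_8)));
        }
        return entries;
    }

    public static void main(String[] args) {
        OffHeapEntrySketch log = new OffHeapEntrySketch(1024);
        log.write(new Entry(System.currentTimeMillis(), (byte) 1, "cache miss"));
        log.write(new Entry(System.currentTimeMillis(), (byte) 2, "retrying request"));
        System.out.println(log.readAll().size() + " entries decoded");
    }
}
```

Because only primitive fields and bytes are stored, the buffer holds no references back to the objects that were passed to the logger, which is the property the raw-event approach lacked.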


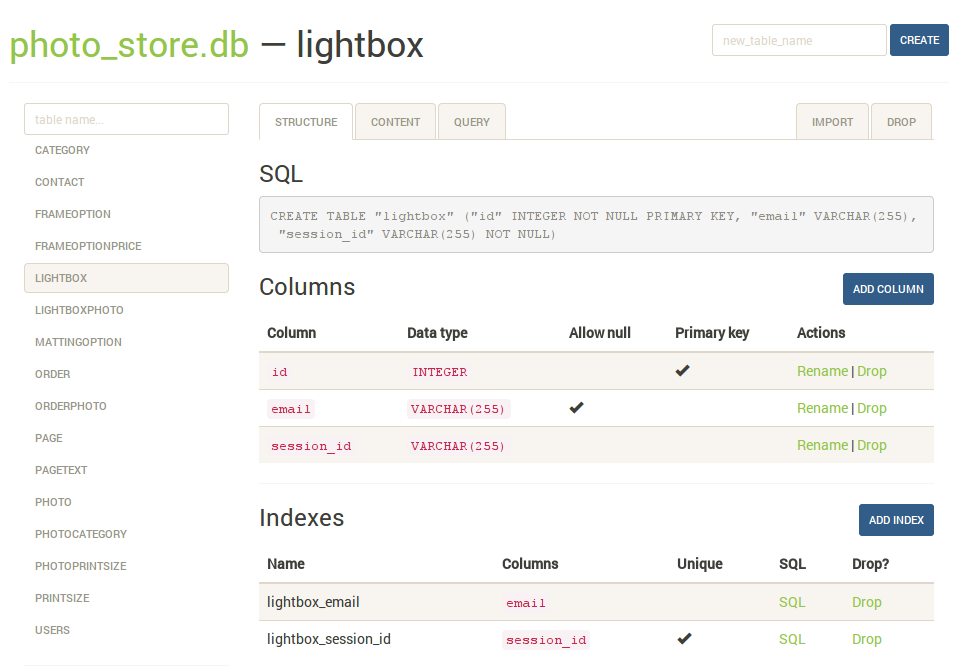

And of course, SQLite has total support across all platforms, and works very well within the larger data-processing ecosystem.

Now let's do the fun question – how and why did it get here? I started off this blog post by writing out the requirements for a forensic logger as if I had total knowledge of what the goal was. The real story is messy, discovering the requirements piecemeal, and involves lots of backtracking over several months. I think it's more interesting and human to talk about.

Dissatisfaction with Ring Buffer Logging

Blacklite is the second try at writing a diagnostic appender. The first try was ring buffer logging, appending log events to an in-memory circular buffer using JCTools. This was very fast and simple to implement, but there were some subtle and not so subtle issues with it.

The main issue was that I was using the raw input from the framework – the log event – rather than running the logging event through an encoder and producing a serializable output, the log entry. This avoided the overhead of encoding every event, but also caused a number of downstream issues. There were memory issues: the logging event could hold references to arbitrary objects passed in as input, meaning there could be memory leaks. I was using an on-heap memory queue, and in a large queue objects were more likely to be tenured and go through full garbage collection.
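For readers who haven't seen ring buffer logging, here is a minimal sketch of the idea. The original used a JCTools queue; this version swaps in a synchronized `ArrayDeque` from the JDK so it runs without dependencies, and the class name `RingBufferSketch` is made up for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: a bounded in-memory buffer that keeps only the newest events.
// A synchronized ArrayDeque stands in for the JCTools queue mentioned in the text.
final class RingBufferSketch<E> {
    private final ArrayDeque<E> buffer;
    private final int capacity;

    RingBufferSketch(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
        this.buffer = new ArrayDeque<>(capacity);
    }

    // Append an event, evicting the oldest one when the buffer is full.
    synchronized void append(E event) {
        if (buffer.size() == capacity) {
            buffer.pollFirst();
        }
        buffer.addLast(event);
    }

    // Copy out the current contents, oldest first, e.g. to "dump" them to another appender.
    synchronized List<E> snapshot() {
        return new ArrayList<>(buffer);
    }

    public static void main(String[] args) {
        RingBufferSketch<String> ring = new RingBufferSketch<>(3);
        for (int i = 1; i <= 5; i++) {
            ring.append("event-" + i);
        }
        System.out.println(ring.snapshot()); // prints [event-3, event-4, event-5]
    }
}
```

Note that the buffer stores the event objects themselves. Anything an event references stays reachable until the event is evicted, which is where the memory and tenuring concerns come from.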

They are as easy to store and transport as flat file logs, but work much better when merging out of order or interleaved data between two logs. Unlike flat file JSON, additional information can be added as ancillary tables in the database without disturbing the main log file, allowing queries by reference outside the original application context.

SQLite databases are also better log files in general. Queries are faster than parsing through flat files, with all the power of SQL. A vacuumed SQLite database is only barely larger than flat file logs.

TL;DR: I've published a logging appender called Blacklite that writes logging events to SQLite databases. It has good throughput and low latency, and comes with archivers that can delete old entries, roll over databases, and compress log entries using dictionary compression, which looks for common elements across small messages and extracts them into a common dictionary.

Overview

Blacklite is intended for diagnostic logging in applications running in staging and production. When things go wrong and you need more detail than an error log entry can provide, Blacklite can reveal all of the messy detail in your application.

Because Blacklite uses SQLite as a backend, the JSON API lets the application search for relevant debugging and trace entries – typically by correlation id – and can determine a response.

For example, you may aggregate diagnostic logs to centralized logging, attach them to a distributed trace as log events, or even just send an email – see the showcase application for details.

Diagnostic logging has no operational costs, because it's local and on demand. There are no centralized logging costs involved – no indexing, no storage costs, no Splunk licenses or Elasticsearch clusters. Logging at a DEBUG level in your application using Blacklite has minimal overhead on the application itself. Archiving ensures that the database size is bounded and will never fill up a partition.
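Dictionary compression is easier to see than to describe. The sketch below uses the JDK's `Deflater` preset-dictionary support as a stand-in; it is not necessarily the codec Blacklite's archivers use, and the dictionary contents, log entry, and class name are assumptions chosen for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

// Hypothetical sketch: compress small log entries against a shared dictionary.
final class DictionaryCompressionSketch {
    // Assumed dictionary: fragments expected to recur across many small entries.
    static final byte[] DICTIONARY =
        "{\"level\":\"DEBUG\",\"logger\":\"com.example.\",\"message\":\"\",\"correlationId\":\""
            .getBytes(StandardCharsets.UTF_8);

    // Compress input; pass null as the dictionary to compress without one.
    static byte[] compress(byte[] input, byte[] dictionary) {
        Deflater deflater = new Deflater(Deflater.BEST_COMPRESSION);
        try {
            if (dictionary != null) {
                deflater.setDictionary(dictionary);
            }
            deflater.setInput(input);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[256];
            while (!deflater.finished()) {
                out.write(chunk, 0, deflater.deflate(chunk));
            }
            return out.toByteArray();
        } finally {
            deflater.end();
        }
    }

    // Decompress; the same dictionary must be supplied if one was used to compress.
    static byte[] decompress(byte[] compressed, byte[] dictionary) {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[256];
            while (!inflater.finished()) {
                int n = inflater.inflate(chunk);
                if (n == 0 && inflater.needsDictionary()) {
                    inflater.setDictionary(dictionary);
                } else if (n == 0 && inflater.needsInput()) {
                    throw new IllegalStateException("truncated input");
                }
                out.write(chunk, 0, n);
            }
            return out.toByteArray();
        } catch (DataFormatException e) {
            throw new IllegalStateException("corrupt input", e);
        } finally {
            inflater.end();
        }
    }

    public static void main(String[] args) {
        byte[] entry = ("{\"level\":\"DEBUG\",\"logger\":\"com.example.Service\","
            + "\"message\":\"cache miss\",\"correlationId\":\"abc123\"}").getBytes(StandardCharsets.UTF_8);
        System.out.println("raw bytes:          " + entry.length);
        System.out.println("without dictionary: " + compress(entry, null).length);
        System.out.println("with dictionary:    " + compress(entry, DICTIONARY).length);
    }
}
```

A single small message has little internal repetition for a general-purpose compressor to exploit. Moving the shared structure (field names, common prefixes) into a dictionary lets each entry be encoded mostly as references to that dictionary.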
